Durin: Privacy and Product Marketing as Trust Building

Apple

Sells devices that use facial recognition both in the "real world," in real time via device sensors (Face ID), and through machine learning on photos stored in iCloud

Guiding Principles

Control

Transparency

Fiduciary-like behavior

Privacy Policy

No one reads privacy policies, but Apple’s is remarkable:

“At Apple, we believe strongly in fundamental privacy rights — and that those fundamental rights should not differ depending on where you live in the world. That’s why we treat any data that relates to an identified or identifiable individual or that is linked or linkable to them by Apple as 'personal data,' no matter where the individual lives. This means that data that directly identifies you — such as your name — is personal data, and also data that does not directly identify you, but that can reasonably be used to identify you — such as the serial number of your device — is personal data. Aggregated data is considered non‑personal data for the purposes of this Privacy Policy."
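The policy's point about serial numbers can be illustrated with a small, hypothetical join: a serial number identifies no one by itself, but it links an activity log back to a named account. The data, field names, and functions below are invented for illustration and are not drawn from any Apple system.

```python
from collections import Counter

# Hypothetical sample data: neither list alone pairs a name with an activity.
accounts = [
    {"name": "Alice", "device_serial": "SN-001"},
    {"name": "Bob", "device_serial": "SN-002"},
]
events = [
    {"device_serial": "SN-001", "event": "front door unlocked"},
    {"device_serial": "SN-002", "event": "garage opened"},
    {"device_serial": "SN-001", "event": "front door unlocked"},
]


def link_on_serial(accounts, events):
    """Join the two datasets on the serial number, re-attaching names to events."""
    name_by_serial = {a["device_serial"]: a["name"] for a in accounts}
    return [
        (name_by_serial[e["device_serial"]], e["event"])
        for e in events
        if e["device_serial"] in name_by_serial
    ]


def aggregate_events(events):
    """Count events by type only; the serial number (the linking key) is dropped."""
    return Counter(e["event"] for e in events)


print(link_on_serial(accounts, events))
print(aggregate_events(events))
```

Aggregation is not automatically anonymizing, though: in a small household, a count of 1 can still point to one person.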

Linked Data Demo

Back to Apple

Face ID

“Face ID data—including mathematical representations of your face—is encrypted and protected by the Secure Enclave.

… Face ID data doesn’t leave your device and is never backed up to iCloud or anywhere else.

… Apps are notified only as to whether the authentication is successful.”
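The "apps are notified only as to whether the authentication is successful" design amounts to an interface that returns a single boolean. A minimal sketch, assuming a hypothetical `LocalMatcher` class and a cosine-similarity threshold; this is not Apple's API, and Python cannot provide the hardware isolation the Secure Enclave does.

```python
import math


class LocalMatcher:
    """Hypothetical on-device matcher: the enrolled template stays inside this object."""

    def __init__(self, enrolled_template, threshold=0.9):
        # Leading underscore marks these as private by convention only.
        self._template = list(enrolled_template)
        self._threshold = threshold

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def authenticate(self, sample):
        """Return only True/False; no score or template is handed to the caller."""
        return self._cosine(self._template, sample) >= self._threshold
```

The calling app learns one bit per attempt, which is the property the quoted documentation describes.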

User Control and Transparency

“If you choose to enroll in Face ID, you can control how it's used or disable it at any time.”

HomeKit Secure Video

“Your data is private property... your Home app data is stored in a way that Apple can’t read it. Your accessories are controlled by your Apple devices instead of the cloud, and communication is encrypted end-to-end."

So only you and the people you choose can access your data.

HomeKit Secure Video (cont.)

For facial recognition, Apple doesn’t upload data from your network to its servers. Instead, it relies on faces you’ve already identified in the Photos app.

Apple Takeaways

- Clear writing is clear thinking. Apple’s writing is very clear.

- Documentation is product marketing (control and transparency).

- Local wherever possible.

- Transparency… but more about hardware and software than data requests.

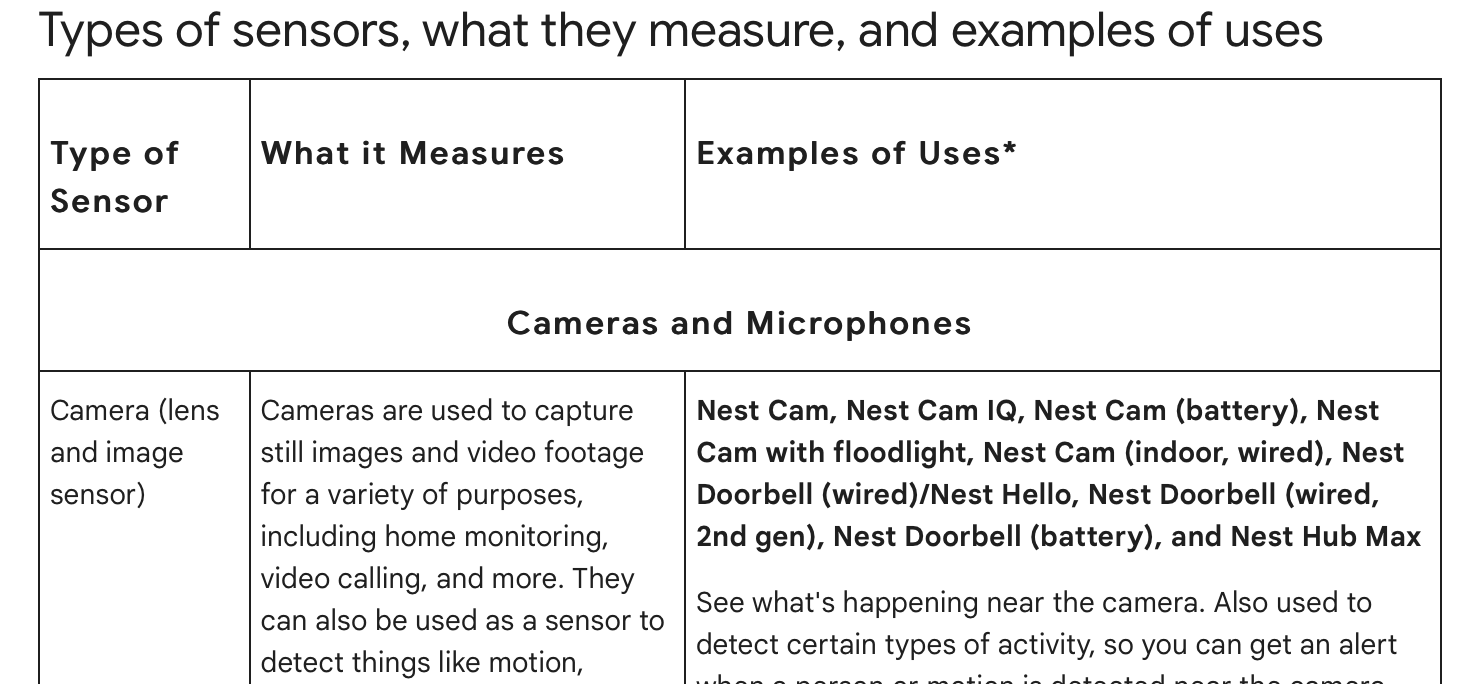

Google Nest

Sells products that are more directly competitive with proposed Durin offerings

Facial recognition disabled in Illinois

Privacy Policy

- Little detail

- Changes frequently

- Extraordinarily informal

- Commingles concepts

Support/Documentation

Tired

- Pushes compliance work to users

- Not all processing is local

Wired

- Specifics on "Familiar faces" feature

- Third party audits

- “Sensor Glossary”

Ring

Directly competitive devices; could be partners on services

Ring’s Stance on Facial Recognition Technology

“Ring does not use facial recognition technology in any of its devices or services, and will neither sell nor offer facial recognition technology to law enforcement.”

Encryption

- End-to-end Encryption: Not enabled by default

- Significant technical detail on methods used when enabled

"Public Safety" Access Is Transparent...

“Beginning next week, public safety agencies will only be able to request information or video from their communities through a new, publicly viewable post category on Neighbors called Request for Assistance. ... All Request for Assistance posts will be publicly viewable in the Neighbors feed, and logged on the agency’s public profile. This way, anyone interested in knowing more about how their police agency is using Request for Assistance posts can simply visit the agency’s profile and see the post history.”

… Maybe?

In response to a letter from Senator Ed Markey (D-Mass.), Amazon admitted that it has shared video with police without user consent.

Wyze

Directly competitive; de-emphasizes privacy, or really any "policy" issues

Privacy policy

Wyze discloses information about users under loosely defined circumstances:

“In certain circumstances, we disclose (or permit others to directly collect) information about you.”

- No mention of facial recognition in the privacy policy (!), despite selling a facial recognition product (Friendly Faces)

Security

- Little disclosure of encryption methods

- Unclear where processing takes place, or what happens to non-video data

Implications for Durin

We're in the business of trust, and working in a zero-trust environment.

Policy needs

The really obvious ones

Defining Active v Passive ID forms

Illustrating the policy implications of each form and what is possible with each, e.g., ignoring bystanders or processing multiple factors
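One way to make "ignoring bystanders" concrete: assume passive ID compares each detected face against enrolled residents' templates and keeps only matches. The function names, the cosine-similarity measure, and the 0.9 threshold are assumptions for illustration, not a Durin design.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify_enrolled(
    detections: List[Sequence[float]],
    enrolled: Dict[str, Sequence[float]],
    threshold: float = 0.9,
) -> List[str]:
    """Return IDs of enrolled people seen in a frame (hypothetical passive-ID step).

    Faces that match no enrolled template (bystanders) are not stored,
    counted, or returned; their embeddings simply go out of scope.
    """
    matches = []
    for face in detections:
        best_id, best_score = None, threshold
        for person_id, template in enrolled.items():
            score = cosine(face, template)
            if score >= best_score:
                best_id, best_score = person_id, score
        if best_id is not None:
            matches.append(best_id)
    return matches
```

The policy question this surfaces is whether "not stored" is enough, or whether bystander faces should also never be processed beyond detection.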

Security

- On-Device Processing Policy

- Device Storage v Device Memory

- Encryption Policy

Analysis

- What Durin analyzes, when and how

Particularly worth comparing Active and Passive ID

e.g., actual face representations are turned into a UUID that is not linked or linkable to faces, which we use to understand regular usage patterns, etc.; OR we look only at aggregated trends, etc.
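The two options in that example can be sketched side by side. This assumes a hypothetical on-device `PseudonymRegistry` that issues random `uuid4` values per face template; the UUID encodes nothing about the face, but the registry's mapping is itself a link, so it must stay on the device (and be discarded to make the UUIDs truly unlinkable).

```python
import uuid
from collections import Counter
from datetime import datetime


class PseudonymRegistry:
    """Hypothetical on-device registry mapping a local face-template key to a random UUID."""

    def __init__(self):
        self._by_template = {}  # template key -> pseudonym; assumed never to leave the device

    def pseudonym_for(self, template_key):
        # uuid4() is random, so the pseudonym carries no information derived from the face.
        if template_key not in self._by_template:
            self._by_template[template_key] = str(uuid.uuid4())
        return self._by_template[template_key]


def usage_pattern(events, pseudonym):
    """Per-pseudonym pattern: how often one unnamed person is seen at each hour."""
    return Counter(ts.hour for p, ts in events if p == pseudonym)


def aggregate_trend(events):
    """Aggregated trend: activity per hour across everyone, with pseudonyms dropped."""
    return Counter(ts.hour for _, ts in events)
```

The per-pseudonym view still describes one real person's routine, so it deserves a stricter policy than the aggregate view.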

Deletion

- Does delete mean delete, delink, or something else?
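The difference between "delink" and "delete" can be shown with a hypothetical store that keeps names and pseudonymous events separately. Everything here is illustrative; a real answer also has to cover backups, caches, and derived models, which this sketch ignores.

```python
class FaceDataStore:
    """Hypothetical store separating identities from pseudonymous events."""

    def __init__(self):
        self.identities = {}  # pseudonym -> user-assigned name, e.g. "Mom"
        self.events = []      # (pseudonym, event description) tuples

    def record(self, pseudonym, name, event):
        self.identities[pseudonym] = name
        self.events.append((pseudonym, event))

    def delink(self, pseudonym):
        """Drop the name only; events remain but no longer map to a person in this store."""
        self.identities.pop(pseudonym, None)

    def delete(self, pseudonym):
        """Drop the name and every event recorded under the pseudonym."""
        self.delink(pseudonym)
        self.events = [(p, e) for p, e in self.events if p != pseudonym]
```

Whichever meaning Durin adopts, the user-facing word should match what the code actually does.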

Law Enforcement and Transparency

- Statement on what Durin can/cannot provide

- Law enforcement requests policy

- Legal requirements/subpoenas policy

- Transparency reporting policy

- Requests, subpoenas

- Warrant and National Security Letter Canary Statements

State level specifics

- Illinois Biometrics …

- But CA, WA, CO, etc. also have active laws that will require policy (and product) solutions

The MVP will need to both create in-product "wizards" and tie into system-wide consent flows

Multi-dwelling units

- Clear understanding of what can be accessed by whom, when, and how
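"Who, when, and how" maps naturally onto a default-deny access check. A minimal sketch, assuming a hypothetical `AccessGrant` record with principal, resource, action, and a daily time window; the role and device names are invented examples.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class AccessGrant:
    principal: str  # who, e.g. "unit-4b-resident" or "building-manager"
    resource: str   # what, e.g. "lobby-camera"
    action: str     # how, e.g. "view-live" or "export-clip"
    start: time     # when: start of the daily window (inclusive)
    end: time       # when: end of the daily window (exclusive; windows crossing midnight not handled)


def is_allowed(grants, principal, resource, action, at: datetime) -> bool:
    """Default-deny: allow only if some grant matches who, what, how, and when."""
    t = at.time()
    return any(
        g.principal == principal
        and g.resource == resource
        and g.action == action
        and g.start <= t < g.end
        for g in grants
    )
```

Default-deny keeps the policy auditable: anything not explicitly granted is refused, so the list of grants is the complete answer to "who can see what."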

Data Sharing?

- If any data can be shared, what governs it, including:

- Internal process/audits/pen testing

- Technical standards/safeguards

- Contractual obligations/commitments

Product needs

The really obvious ones

Bystanders

- How do our products categorize and protect them?

System-wide, consistent consent flows

- Users should know

- When they’re being asked to give their consent

- For what they’re being asked to consent

- The implications of their choice

- If their choice is (or how it is) revocable
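The four things users should know can be captured as fields of a consent record, so that every prompt in a system-wide flow is forced to supply them. A minimal sketch; `ConsentRecord` and its fields are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class ConsentRecord:
    """Hypothetical record of a single consent decision."""

    purpose: str        # for what the user is being asked to consent
    implications: str   # plain-language consequences shown with the prompt
    revocable: bool     # whether the choice can be undone (revoke() is the "how")
    asked_at: datetime  # when the user was asked
    granted: bool       # the user's answer
    revoked_at: Optional[datetime] = None

    def revoke(self, when: datetime) -> None:
        """Withdraw consent; refuses if the prompt told the user the choice was final."""
        if not self.revocable:
            raise ValueError("consent was presented as non-revocable")
        self.revoked_at = when

    def is_active(self) -> bool:
        return self.granted and self.revoked_at is None
```

Storing the shown implications alongside the answer also gives a record of what the user actually agreed to, which supports policies that "can be demonstrably followed."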

System-wide, consistent sharing interface

- Users should know

- When they’re sharing

- What they’re sharing

- The implications of their choice

- If their choice is (or how it is) revocable

Policies that can be demonstrably followed help create trust in a zero-trust environment

Durin Product Implications Discussion

| Discussion questions |
|---|
| What values do we want our products to espouse? |
| What can we NOT build because it would violate our values? |
| What data must we collect in order to succeed? |
| What is counter-intuitive about collecting data to protect privacy? |
| How do we balance analyzing data for better security with reasonably protecting privacy? |
| Where are privacy v security tradeoffs NOT needed, e.g., where can we get both? |
| What is the difference between active and passive ID? |