Durin: Speak Friend and Enter

Integrating privacy and good policy choices into product development from the beginning

Herman Yau & Andrew Gruen

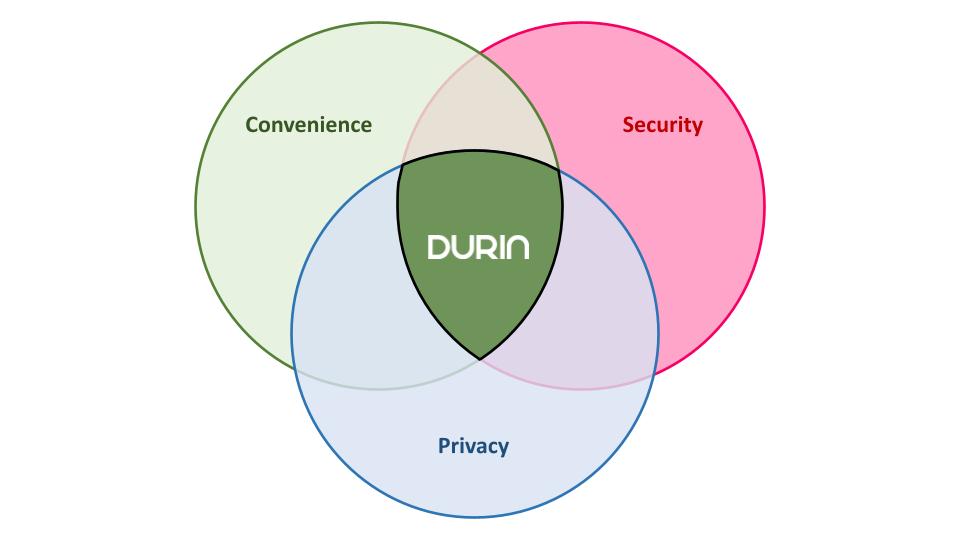

Durin is an identity and access company, bringing the standards and best practices of digital access experiences into the embodied world of homes and physical spaces.

The Business of Trust

Trust is central to building access control for your home... so what actions can a business take to show, not tell, that it prioritizes users' privacy and builds trust?

Latch’s privacy policy is only moderately reassuring. ... But hacks and data leaks happen all the time. ... How much trust do I owe a company ...

Overview:

- Review policies

- Break assumptions

Thesis: Policy and Process Can Build Trust

How We Make Tradeoffs Shows Our Priorities

- Privacy is important

- Functionality is important

- A healthy business is important

- What comes first?

Convenience, Security and Privacy

Prioritized Policies

- Customer Control

- Encryption

- Local

- Analysis

- Deletion

- Bystanders

Customer Control

Principally, we want to put the customer in control wherever possible, particularly where there are meaningful tradeoffs between values.

Implications of prioritizing customer control

- Explain defaults

- Avoid decision paralysis

- Law enforcement

- Data sharing

Encryption

Encryption itself presents users with a series of tradeoffs, which is why it's not our highest principle... but that doesn't mean it's unimportant.

A note on E2EE vs. encryption "in transit" and "at rest"

Encryption in transit and at rest are always required, and E2EE, with our users holding the keys, is always the goal... but sometimes user choice takes precedence.

Example:

Privacy/Security vs Convenience

E2EE guarantees... or connection to other "smart home" systems?
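One way to make this tradeoff an explicit user choice is sketched below. It is a hypothetical settings model, not Durin's actual design: the names `EncryptionSettings`, `enable_integrations`, and `smart_home_integrations` are invented for illustration, and it assumes (for the sketch only) that a third-party "smart home" integration needs data the service can read. Encryption in transit and at rest is modeled as always on, while E2EE is relaxed only after the user accepts the tradeoff.

```python
from dataclasses import dataclass


@dataclass
class EncryptionSettings:
    """Hypothetical per-home settings; field names are illustrative, not a real API."""

    e2ee_enabled: bool = True              # default: users hold the keys
    smart_home_integrations: bool = False  # off unless the user opts in

    @property
    def in_transit_and_at_rest(self) -> bool:
        # Always required under the policy above; never exposed as a user setting.
        return True

    def enable_integrations(self, user_accepted_tradeoff: bool) -> None:
        # Assumption: the integration needs data our service can read in plaintext,
        # so E2EE is relaxed only after the user explicitly accepts that tradeoff.
        if not user_accepted_tradeoff:
            raise PermissionError("integrations require explicitly giving up E2EE")
        self.smart_home_integrations = True
        self.e2ee_enabled = False

    def disable_integrations(self) -> None:
        # Reverting the choice restores the E2EE default.
        self.smart_home_integrations = False
        self.e2ee_enabled = True
```

The default is the privacy-preserving option, and the weaker mode exists only behind an explicit confirmation, which is one reading of "E2EE is always the goal... but sometimes user choice takes precedence."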

Local

Great for customer choice—there’s no better way to ensure customer choice than to make sure they physically control their data—but does anyone know or care?

Local whenever possible

- Easy-to-demonstrate adherence to the customer control principle

- No honeypot

- Law enforcement AND cybercrime

- Faster

Analysis

What do we process, and how do we think about this systematically?

Data categorization and minimization

Data Categorization

Creating clear categories for the data that exists helps determine whether each kind should be collected at all.

- Face Crops

- Faceprints

- RF IDs (e.g. MAC addresses)

- Historical usage (e.g. access logs)

- External signals from partner services
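A minimal sketch of how the categories above could drive collection decisions. The category names come from the list; the `ALLOWED_PURPOSES` mapping and the `should_collect` helper are hypothetical placeholders for whatever a real policy review would approve.

```python
from enum import Enum


class DataCategory(Enum):
    FACE_CROP = "face crop"
    FACEPRINT = "faceprint"
    RF_ID = "RF ID"                        # e.g. MAC addresses
    HISTORICAL_USAGE = "historical usage"  # e.g. access logs
    PARTNER_SIGNAL = "partner signal"      # external signals from partner services


# Hypothetical approved purposes per category; one decision per category,
# not per individual data point.
ALLOWED_PURPOSES: dict[DataCategory, set[str]] = {
    DataCategory.FACE_CROP: {"profile_photo"},
    DataCategory.FACEPRINT: {"unlock"},
    DataCategory.RF_ID: {"presence_detection"},
    DataCategory.HISTORICAL_USAGE: {"access_history"},
    DataCategory.PARTNER_SIGNAL: set(),  # nothing approved yet in this sketch
}


def should_collect(category: DataCategory, purpose: str) -> bool:
    """Collect only when the category has an approved purpose matching the request."""
    return purpose in ALLOWED_PURPOSES.get(category, set())
```

Any new data point is first assigned a category, and the question "should we collect it?" then reduces to a lookup that can be reviewed in one place.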

Data Minimization

Developing categories helps to make data minimization easier; rather than trying to make decisions about every individual data point possible, we can rely on the categories as guides.

Example:

New user registration

When we register a new user, what individual pieces of data do we collect, where do we store them, and what processing do we perform on them?

- Profile photos

- Faceprints

- Encryption keys
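The three questions (what, where, what processing) can be recorded as a small data inventory and checked mechanically. In this sketch, `InventoryEntry`, `review`, and the "local" storage answers are assumptions chosen to reflect the "local whenever possible" principle, not a description of Durin's actual registration flow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InventoryEntry:
    """One row of a hypothetical data inventory for a single product flow."""

    name: str
    storage: str                   # "local" (customer's devices) or "cloud"
    processing: str                # what we do with the data, in plain language
    cloud_justification: str = ""  # required whenever storage is not local


# Illustrative answers for new user registration; storage choices are assumptions.
REGISTRATION_INVENTORY = [
    InventoryEntry("profile_photo", "local", "shown in the app's user list"),
    InventoryEntry("faceprint", "local", "matched at the door to unlock"),
    InventoryEntry("encryption_keys", "local", "held by the user for E2EE"),
]


def review(inventory: list[InventoryEntry]) -> list[str]:
    """Flag entries with no stated processing or unjustified off-device storage."""
    problems = []
    for entry in inventory:
        if not entry.processing:
            problems.append(f"{entry.name}: no stated processing")
        if entry.storage != "local" and not entry.cloud_justification:
            problems.append(f"{entry.name}: off-device storage without justification")
    return problems
```

An empty result from `review` means every field has a stated purpose and any cloud storage has a written justification.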

Deletion

Delete means delete, not de-link... unless doing so reduces privacy or is in direct, documentable conflict with a higher principle.

Deletion v de-linking

- Deletion: removing data

- De-linking: removing linkage between the data subject and the data
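The distinction can be illustrated with a toy in-memory store; `RecordStore` and its methods are hypothetical. `delete` removes the records themselves, while `delink` keeps them but drops the subject identifier. One caveat the sketch does not address: if the payload itself identifies the person (a face crop, for example), removing the ID field alone is not meaningful de-linking.

```python
import uuid


class RecordStore:
    """Toy in-memory store contrasting deletion with de-linking (illustrative only)."""

    def __init__(self) -> None:
        self.records: dict[str, dict] = {}

    def add(self, subject_id: str, payload: str) -> str:
        record_id = str(uuid.uuid4())
        self.records[record_id] = {"subject_id": subject_id, "payload": payload}
        return record_id

    def delete(self, subject_id: str) -> None:
        # Deletion: the data itself is gone.
        self.records = {
            rid: rec for rid, rec in self.records.items()
            if rec["subject_id"] != subject_id
        }

    def delink(self, subject_id: str) -> None:
        # De-linking: the data stays, but the pointer to the data subject is removed.
        for rec in self.records.values():
            if rec["subject_id"] == subject_id:
                rec["subject_id"] = None
```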

Conflicts with other values

- Some privacy-enhancing technologies (e.g. differential privacy) mean that deletion can reduce privacy guarantees

- Operational data (e.g. percent of deployed devices on latest firmware) help enhance other values and can be meaningfully collected if de-linked.
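For the operational-data example, a minimal sketch assuming each device report arrives as a dict with a `firmware` field plus identifying fields (a hypothetical schema). The identifiers are dropped before anything is stored, and the metric is computed only from what remains.

```python
from collections import Counter


def delink_reports(reports: list[dict]) -> list[str]:
    """Keep only the firmware version from each report; device and owner IDs are dropped."""
    return [report["firmware"] for report in reports]


def percent_on_latest(firmware_versions: list[str], latest: str) -> float:
    """Percentage of de-linked reports that are on the latest firmware."""
    if not firmware_versions:
        return 0.0
    return 100.0 * Counter(firmware_versions)[latest] / len(firmware_versions)


# Usage with hypothetical reports: three of four devices are on "1.3".
reports = [
    {"device_id": "a", "firmware": "1.2"},
    {"device_id": "b", "firmware": "1.3"},
    {"device_id": "c", "firmware": "1.3"},
    {"device_id": "d", "firmware": "1.3"},
]
print(percent_on_latest(delink_reports(reports), latest="1.3"))  # 75.0
```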

Bystanders

Durin is about putting the user in control of their own home—which is not the same as giving them the opportunity to create a dragnet that captures and permanently stores information about every human who walks by.

Example:

Letter carriers

A customer might want:

- To know when USPS mail is delivered

- To know when a commercial delivery service brings a package

- To know if it was an unexpected carrier

A customer doesn't need:

- A face crop of the carrier

- Any personal identification about the carrier
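A sketch of minimizing at the point of capture, so that only what the customer needs is ever persisted. It assumes a hypothetical upstream classifier that produces a `RawDetection` with a carrier label and an optional face crop; the conversion to `DeliveryEvent` keeps the time, carrier type, and whether the carrier was expected, and discards the crop.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class RawDetection:
    """Hypothetical output of an upstream classifier; never persisted as-is."""

    timestamp: datetime
    carrier: str                # e.g. "USPS", "commercial", "unknown"
    face_crop: Optional[bytes]  # may exist in the frame but is not kept


@dataclass(frozen=True)
class DeliveryEvent:
    """What the customer actually needs to know about a delivery."""

    timestamp: datetime
    carrier: str
    unexpected: bool


def to_delivery_event(d: RawDetection, expected_carriers: set[str]) -> DeliveryEvent:
    # The face crop is deliberately not copied; no personal identification survives.
    return DeliveryEvent(d.timestamp, d.carrier, d.carrier not in expected_carriers)
```

Because `DeliveryEvent` has no field for a face crop or identity, the data model itself enforces the "doesn't need" list rather than relying on later cleanup.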

A GDPR-inspired product-development ... solution?

Example bystander questionnaire

- Clear purpose specification?

- Data limitation?

- Opportunity to inform? (likely not)

- Specific bystander detection opportunity being created?

- Is bystander data being retained? If so, why? (related to safeguards)

- Specific safeguards (organizational or technical) relating to this specific detection opportunity?

- Do these specific risks to bystanders add anything beyond the risk that already existed in the base product?

- Do the safeguards entirely mitigate the additional risk to bystanders?

- If not, why are we building this feature anyway?
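The questionnaire could also be captured as a structured review record so that unanswered items block sign-off. This is a hypothetical sketch: `BystanderReview`, its field names, and the rule that each gap is a blocker are illustrative choices, not an established process. "Opportunity to inform" is recorded but not treated as a blocker, since the slide expects the answer to usually be no.

```python
from dataclasses import dataclass, field


@dataclass
class BystanderReview:
    """Hypothetical record of answers to the bystander questionnaire for one feature."""

    feature: str
    purpose: str                  # clear purpose specification
    data_limited: bool            # data limitation
    can_inform_bystanders: bool   # opportunity to inform (likely False)
    detection_opportunity: str    # new bystander detection being created, if any
    retains_bystander_data: bool
    retention_reason: str = ""
    safeguards: list[str] = field(default_factory=list)
    adds_risk_over_base: bool = False
    safeguards_fully_mitigate: bool = False
    reason_to_build_anyway: str = ""

    def blockers(self) -> list[str]:
        """Return unanswered or unjustified items; an empty list means ready for sign-off."""
        issues = []
        if not self.purpose:
            issues.append("no clear purpose")
        if not self.data_limited:
            issues.append("data not limited")
        if self.retains_bystander_data and not self.retention_reason:
            issues.append("bystander data retained without a reason")
        if self.detection_opportunity and not self.safeguards:
            issues.append("new detection opportunity without safeguards")
        if (self.adds_risk_over_base and not self.safeguards_fully_mitigate
                and not self.reason_to_build_anyway):
            issues.append("unmitigated added risk without a reason to build")
        return issues
```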

Discussion

Open questions

- Is the bystander section a policy unto itself, or an implementation of policies? Is it appropriately prioritized?

- Is an explicit "privacy" policy needed in here? If so, what does it do and where does it go?

- What processes and documentation are appropriate for implementation of these policies at a company with three FTEs? And how does that change as it scales?

- All of this is quite separate from legal compliance (e.g. the product can't operate in Illinois because of BIPA). Thoughts?

- Consent flows: we need consistent flows that are clear and that people understand. What's the state of the art?